Vocab Activity User Testing

Challenge: Gather insights to help create a better overall user experience for the vocabulary activities in the Portales 2.0 program in VHL Central.

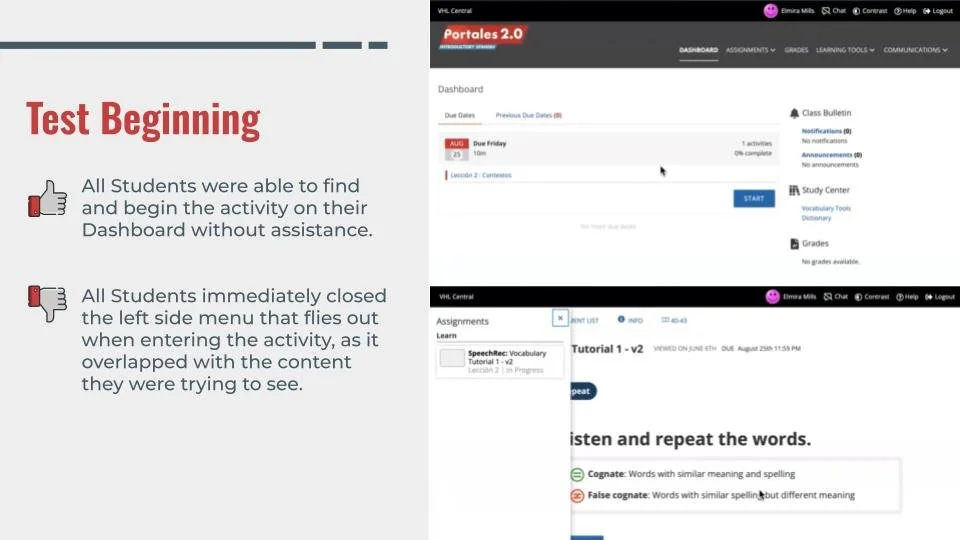

Background: As new programs are planned, lessons from existing programs can be used to improve them. One such program worth reflecting on is Portales 2.0. For this project, I conducted user testing on the vocabulary tutorial in Portales 2.0 to validate the design, find issues with workflows, and gather unbiased user opinions.

Process: We targeted higher-ed language students aged 18 to 25. The test focused on the desktop experience and used the development version of the activity to simulate the full experience. I developed a set of pre-test demographic questions, testing tasks, and follow-up questions to ask after the test was completed (listed below). The testing was fully remote and moderated. We tracked the device, browser, and operating system for each student.

Implementation: Ten users completed the testing process, all over Zoom with video, audio, and screen sharing turned on. Participants included four adult students and six first- and second-year college students. Eight were current VHL users and two were non-VHL users. All had some familiarity with Spanish, either from high school or college classes.

Screening Questions:

What is your name and grade level?

What languages do you study?

What devices and browsers do you use when using VHL Central?

Do you use your phone when completing assignments? What type of phone is it?

Have you ever used the vocabulary tutorials in VHL Central?

Testing Tasks:

You’re completing your assigned homework for class. Find your first assignment.

Before beginning, set the speed to your desired pace.

Complete the first part of the tutorial.

What did you think of the Listen and Repeat experience?

Can you tell me what you think of the pace?

Complete the second part of the tutorial.

What did you think of the Match experience?

Did you know which image was correct or incorrect? How?

Complete the third part of the tutorial.

What did you think of the Say It experience?

I noticed you used/did not use Manual Mode. Why did you choose that?

I noticed you used/did not use Speech Recognition. Why did you choose that?

Review your results of the tutorial. What action would you take next?

Follow-up Questions:

What was your overall impression of the vocabulary tutorial?

What was the best and the worst thing about this activity?

Overall, can you tell me what you think of the length of the activity?

Overall, can you tell me what you think of the speed of the activity?

How would you compare the vocabulary tutorial to other activities in VHL Central?

Any additional feedback?

Sample of full report shared with stakeholders post-testing.

Results: Following testing completion, I summarized all the findings and presented my top recommendations to the internal teams (shown below). These findings were used to update the activity and will also influence the next iteration or release of this activity in VHL Central. In addition to these recommendations, we also uncovered a browser-specific bug that the engineers were able to address before the activity was released.

Top Recommendations:

Provide additional navigation within the activity. Students who experienced Manual Mode by default generally seemed to have a better experience and appeared to enjoy being in control. Adding a back button would also help.

Add a way to redo only the words that were missed during the tutorial, rather than being forced to repeat the entirety of each section.

Give additional guidance before each section of the tutorial on what to expect and how to be successful in that part of the activity.

Show additional information on what the different settings mean (speed controls, Manual Mode, and Speech Recognition).

Display more detailed feedback during Speech Recognition. Rather than simply marking responses right or wrong, the activity could offer suggestions for improvement. It could also let students replay their recordings to compare their own speech with that of native speakers.

Update the Results page to more accurately reflect how students performed. Currently, only Say It is taken into account, so students who answered 100% of Match correctly were still told on the results screen that they had missed words.